The reality is, a lot of the time, most of us haven’t got a clue what we’re doing. A growth mindset lets you collectively acknowledge this elephant in the room and it lets you deal with it in a systematic way that improves your odds with the resources available.

The biggest difference between brand marketing and growth is that growth is driven by experimentation. The idea is that you constantly experiment with different ideas, programs, campaigns and features to continually eke out improvements.

The key is being able to run lots of experiments over time. You’ll probably get most of them wrong. But you only need a few to work each quarter. Moving a metric by 2 percentage points each time adds up.

The lean methodology is all about coming up with hypotheses and figuring out the fastest way to test the best ones. If you’re wrong, you’re never heavily invested in something nobody wants.

With traditional brand marketing you flesh out one idea, end to end, and then put all your chips on it. If you’re right, you win big. If you’re wrong, there’s no second chance because you put all of your chips on one big campaign.

## Three types of skills you need to get good at growth

There’s expertise, analytics and strategy. Expertise just means understanding how a marketing channel works and having some experience with it.

The most important ones are SEO, email marketing, ads, content marketing and social. You can’t work in growth without knowing how to do at least one of these things. Expertise is also the least important aspect of growth to focus on because it’s easy to learn.

Next is analytics. You must be able to use data to make better decisions. This one is important. You don’t have to master SQL; you can do everything in Excel. But you need to be able to extract data, gather insights, and analyse your own experiments.

Strategy is about being able to come up with good ideas and figuring out which experiments to prioritise. This one is tricky. You have to actually understand your customers and what they’re trying to do. Working with lots of different teams and stakeholders is also key here.

Generally speaking, a good way to think about this is to get really good at one of these skills in the long run, but maintain a baseline in all of them because you can’t do growth work without all three.

## Defining your growth model

A growth model is an answer to the question of how your product grows. You should be able to answer four basic questions: How do you find new users? How do you plan to keep them? How will you make money? How are you going to defend against the competition?

The most common framework for tracking how well you’re performing on each of these questions is Dave McClure’s Pirate Metrics (AARRR). At the moment, I’m focusing on one metric for traffic, one for conversion, one for weekly active usage (weekly active listeners, drivers, readers, etc.), one for retention and one for the 💰.

Every project is unique but the top-level metrics you pick are just a high-level view of your entire funnel. Each metric represents an opportunity to grow your business in a different way. First, figure out what your metrics are. Then walk through the user journey and understand what it all looks like from their perspective.

The best way that I’ve found to map the customer journey is to start at the end and work backwards. What is the ideal place you want customers to end up with your product? Define that and then work backwards, step by step to the very first point of contact.

If you have different types of users, or different end goals then map a different journey for each one. Understanding how all the pieces fit together is an important exercise to do up front but it’s also useful to revisit once a quarter. You never want to lose sight of what everything looks like from the customer’s point of view. The bigger the team, the easier it is to lose track of this.

## Quarterly growth planning

Once you’ve established your top-level metrics and you’ve mapped the customer journey then you can begin quarterly planning.

Most teams set goals on a quarterly basis. 12 weeks is long enough to do something useful but short enough that the end is always in sight.

The first step to coming up with a good 12-week growth plan is to understand the biggest areas of opportunity and the biggest pain points your customers have. It’s not about the features you build, it’s all down to the problems you solve for people.

I always start with the data. Look at your funnel, explore the data, and identify the biggest areas of opportunity. I’d argue that you shouldn’t start a growth team until you have at least a year of data. You need a baseline understanding of what’s working and what’s broken.

Where in your funnel are the most people dropping off? Are people visiting your website but not converting? Are lots of people converting but few coming back for a second purchase?

You need to be able to identify the biggest area of opportunity so you can prioritise things that have the most impact first. Guessing is not the best way to do this.
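Here’s a rough sketch of what “start with the data” can look like in practice. The step names and user counts are made up for illustration, and `biggest_drop_off` is a hypothetical helper, not something from the course. It just compares each funnel step with the next one and reports where the largest share of people disappears.

```python
def biggest_drop_off(funnel):
    """Return (from_step, to_step, drop_rate) for the transition
    between consecutive steps that loses the largest share of users."""
    worst = None
    for (step, users), (next_step, next_users) in zip(funnel, funnel[1:]):
        drop_rate = 1 - next_users / users
        if worst is None or drop_rate > worst[2]:
            worst = (step, next_step, drop_rate)
    return worst


# Hypothetical funnel counts for one quarter (illustrative only).
funnel = [
    ("visited site", 10_000),
    ("signed up", 2_000),
    ("first purchase", 1_000),
    ("second purchase", 100),
]

from_step, to_step, drop_rate = biggest_drop_off(funnel)
print(f"Biggest drop-off: {from_step} -> {to_step} ({drop_rate:.0%} lost)")
```

With these invented numbers the repeat-purchase step loses the largest share. Drop rate on its own is a blunt measure, because the top of most funnels loses a lot of people by nature, so treat the output as a starting point for digging rather than the answer.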

Once you’ve narrowed in on the bit of the funnel that needs the most love, the idea is to do everything you can to really fine-tune that part of the machine for 12 weeks.

Generally speaking, the sequence for growth planning is to optimise what you have first. Squeeze every ounce out of what’s working before you reach for your next big idea. You improve a metric little by little, 1% here, 1% there, week over week.
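To get a feel for how small weekly wins add up, here is a quick back-of-the-envelope calculation. It assumes each improvement is a relative lift on the same metric and that the lifts compound, which is a simplification; `compound_growth` is just an illustrative name.

```python
def compound_growth(weekly_lift, weeks):
    """Total relative improvement after compounding the same lift each week."""
    return (1 + weekly_lift) ** weeks - 1


# A 1% relative improvement every week for a 12-week quarter.
total = compound_growth(0.01, 12)
print(f"{total:.1%}")  # prints 12.7%
```

In reality most weeks won’t produce a win, so the real number will be lower, but the shape of the maths is why lots of small experiments are worth running.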

By the end of the quarter, you’ve significantly improved a certain experience or metric. Once you start feeling like you’re hitting a ceiling, you either look for a radical new way to improve things, or you move on to the next metric and focus on a different area of growth.

## As many ideas as possible

Once you’ve picked an area of opportunity the next step is to get as many ideas on paper as possible. Big ideas, broad ideas, super-specific ones, silly ones, bold new ones, bat-shit-crazy ones – within the context of the problem you’re focusing on, anything goes.

Get as many different teams and perspectives in the room as practical. At the very least you want to make sure you have someone from the user research team and at least one customer success representative.

Trace the user journey together and flag any rough edges. Use best practices and research what other companies are doing as a source of inspiration. If you can, speak to other people in the industry and ask them what they did about the problem you’re focusing on.

Rather than thinking about increasing value for your business, think about how you can increase value for your customers. The worst way to come up with improvements is to focus on the metrics.

People don’t care about your product. Understanding and focusing on their problems is a far more effective way to improve your performance. Improve their experience and the byproduct is a bump in your metrics.

No matter how random an idea is, there needs to be a rationale: “If we do x, we’ll see an improvement in y metric because of z reason.” The more rooted it is in data or research you’ve done, the better. Structuring ideas like this sets you up to turn them into tangible experiments.

## Stack ranking the best ideas

Figuring out what to work on next is a complicated, multi-stakeholder problem that never goes away.

Look online for how to prioritise stuff and you will run into frameworks like RICE, ICE and PIE. The problem with these frameworks is that everything ends up being rated a medium. The great ideas were obvious to begin with, and everything else ends up in the messy middle.

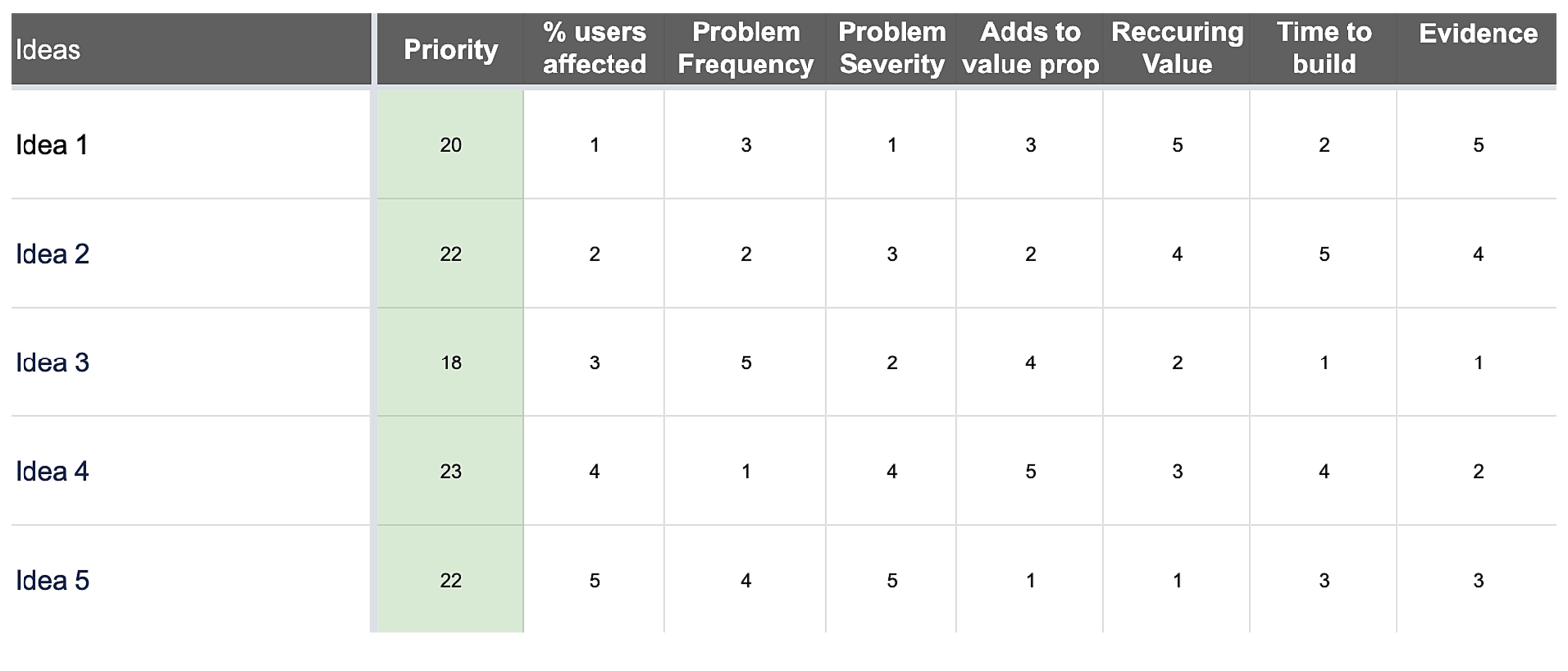

Stack ranking forces you to rank things from best to worst. You can never have two medium ideas; one idea will always get ranked better than the other.

You can also have as many dimensions as you want.

I typically start with:

- The number of people that will be affected

- How often the problem comes up

- How severe the problem is

- How much value the idea adds to our primary value proposition

- How often people will get value from the idea

- How long it will take to build

- How much evidence we have that it will work

Pick 3 to start with. More than 7 and you’re getting into the weeds.

What’s cool about this is that you are only ever comparing options that are in front of you. You’re not asking if something is a good idea, you’re just looking at whether it’s better or worse than the other options on the table.

You add all the scores up and the ones with the lowest totals are the best. So idea 3 would be the winner in the example below.
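The scoring itself is simple enough to sketch in a few lines. This is a minimal version under my own assumptions: the three ideas, the three dimensions and the orderings are all invented for illustration, and `stack_rank` is a hypothetical helper. Each dimension holds the ideas ordered from best to worst, an idea’s score on a dimension is its position in that list, and the lowest total wins.

```python
def stack_rank(rankings):
    """rankings maps each dimension to a list of ideas ordered best to worst.
    Returns (idea, total) pairs sorted so the lowest total (best) comes first."""
    totals = {}
    for ordered_ideas in rankings.values():
        for position, idea in enumerate(ordered_ideas, start=1):
            totals[idea] = totals.get(idea, 0) + position
    return sorted(totals.items(), key=lambda pair: pair[1])


# Hypothetical ideas and orderings (illustrative only).
# For "time to build", best means quickest.
rankings = {
    "people affected": ["reorder email", "loyalty points", "faster checkout"],
    "time to build": ["reorder email", "faster checkout", "loyalty points"],
    "evidence it will work": ["faster checkout", "reorder email", "loyalty points"],
}

for idea, total in stack_rank(rankings):
    print(total, idea)
```

With these made-up orderings, “reorder email” comes out on top with a total of 4. Ties on the total are possible, so in practice you’d want to agree on a tie-breaker, for example the dimension the team cares about most.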

The system is far from perfect, but it does tell you why the best idea won. This is important in a team. If I pitch an idea that doesn’t get picked up, I want to know why. The stack shows me what dimensions it did well on and which ideas beat it in other areas. It helps me understand the tradeoffs the decision-maker had to make.

Even if a HiPPO (highest-paid person’s opinion) rules out an idea, having a stack makes it easier to ask why. When the reason is a dimension that wasn’t on the board, you can add it, and over time you build up clearer shared criteria for ranking things in your organisation.

Stack ranking is not a silver bullet, but it helps. The bottom line with prioritisation is finding the highest return on investment: you want to work first on the ideas that have the highest impact for the least amount of effort.

## Turning them into tangible experiments

The first step in the experimentation process is designing your experiment. A good experiment has an independent variable, a dependent variable, and it’s based on a clear assumption: “If we do x, we’ll see an improvement in y metric because of z reason.”

Let’s say we want to improve retention for a food delivery app. We know that people who order with a coupon have lower retention than average. We’re training people not to pay full price, so the rationale is they’re less likely to come back for a second full-price meal.

You set up the experiment as an A/B test, starting with the most basic version. Your dependent variable is the % of users who buy a second full-price meal after using a coupon. The independent variable is whether or not we send them an email.

The next step is to implement and then ship the experiment so you can measure the results. This post is about the mindset around doing this stuff so I’m going to skip over implementation.

We get the results and we see that email has no measurable impact on getting people to come back for a second meal. The experiment did not succeed but now you know that email is not the best approach with this audience.

On the other hand, let’s say 5% more people buy a second meal because of the email. Now you can test on top of this and try to come up with a better version of the test. You start testing different value propositions in the email content.
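How do you tell “no measurable impact” apart from a real lift? One common approach, which I’m adding here as an assumption rather than something the courses prescribe, is a two-proportion z-test on the two groups. The sketch below uses only the standard library. The group sizes and purchase counts are invented, and I’m reading “5% more” as 5 percentage points.

```python
from math import erf, sqrt


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Two-sided z-test for the difference between two conversion rates.
    Returns (lift, p_value), where lift is rate_b - rate_a."""
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / std_err
    # Standard normal CDF expressed through the error function.
    cdf = 0.5 * (1 + erf(abs(z) / sqrt(2)))
    return rate_b - rate_a, 2 * (1 - cdf)


# Hypothetical results: control (no email) vs. variant (email).
lift, p_value = two_proportion_z_test(conv_a=400, n_a=2000, conv_b=500, n_b=2000)
print(f"lift: {lift:.1%}, p-value: {p_value:.4f}")
```

A small p-value (below 0.05 is a common, if arbitrary, threshold) suggests the lift is unlikely to be noise. With small groups the same 5-point difference may not clear that bar, which is one reason to decide on sample size before you ship the test.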

The next hypothesis could be that talking about price is more effective than talking about convenience when it comes to ordering a second meal. You A/B test them and whichever one wins is the one you move forward with.

You keep repeating that process and stack wins on top of each other until you hit diminishing returns. That’s it. You build, then measure, then you repeat the whole cycle with what you learn. And you keep going through experimentation cycles as quickly as possible.

## Links mentioned

- I got accepted to CXL Institute’s growth marketing mini-degree scholarship. The program runs online and covers 112 hours of content over 12 weeks. As part of the scholarship, I have to write an essay about what I learn each week.

- Everything I learned in this post came from two CXL courses by John McBride called Growth mindset: growth vs traditional marketing and Building a growth process.

- This is post 1 in a series. The rest of the posts are listed here.

- If you’d like to get more updates on growth marketing you can follow me on Twitter @joshpitzalis.