Two weeks ago I got accepted to CXL Institute’s conversion optimisation mini-degree scholarship. It claims to be one of the most thorough conversion rate optimisation training programs in the world. The program runs online and covers 74 hours and 37 minutes of content over 12 weeks. As part of the scholarship, I have to write an essay about what I learn each week. This is the second week’s report.

AB testing is interesting because it promises objectivity. You run a test, one variation performs better, there is a winner and a loser, people’s opinions no longer matter, life is simple.

But then there is the AA test.

To set up an AA test you run a regular test but with the exact same website in both conditions. AA tests are painful because they force you to acknowledge just how random and meaningless AB test results can be.
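To make this concrete, here is a minimal Python sketch that runs an AA test many times over. It assumes a pooled two-proportion z-test (a common choice, not necessarily what any particular testing tool uses) and an illustrative 3% conversion rate shared by both identical pages. The function names are hypothetical. Because both arms are the same page, every "significant" result it counts is a false winner.

```python
import math
import random


def two_proportion_p_value(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """Two-sided p-value from a pooled two-proportion z-test."""
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        # No conversions (or only conversions) in both arms: no evidence of a difference.
        return 1.0
    z = (conv_b / n_b - conv_a / n_a) / se
    # P(|Z| > |z|) for a standard normal Z equals erfc(|z| / sqrt(2)).
    return math.erfc(abs(z) / math.sqrt(2))


def simulate_aa_tests(num_tests: int, visitors_per_arm: int, true_rate: float,
                      alpha: float = 0.05, seed: int = 0) -> float:
    """Run many AA tests (identical pages) and return the share that look 'significant'."""
    rng = random.Random(seed)
    false_winners = 0
    for _ in range(num_tests):
        # Both arms share the same true conversion rate, so any gap is pure chance.
        conv_a = sum(rng.random() < true_rate for _ in range(visitors_per_arm))
        conv_b = sum(rng.random() < true_rate for _ in range(visitors_per_arm))
        if two_proportion_p_value(conv_a, visitors_per_arm, conv_b, visitors_per_arm) < alpha:
            false_winners += 1
    return false_winners / num_tests
```

Calling `simulate_aa_tests(1000, 500, 0.03)` should return a share in the region of 5%, the false-positive rate implied by a 95% threshold: roughly one identical-versus-identical test in twenty still produces a "winner".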

To distinguish a result from chance, it needs to be statistically significant. For significance, you need large numbers. Large numbers can mean either large amounts of traffic or large effects. The larger the effect, or improvement, the less traffic you need to detect it.
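As a rough illustration of that trade-off, the sketch below uses the standard normal-approximation sample-size formula for comparing two conversion rates with a two-sided test. The 3% baseline rate, the 80% power and the function name are assumptions for illustration, not figures from the course.

```python
from math import ceil, sqrt
from statistics import NormalDist


def visitors_per_variant(baseline_rate: float, relative_lift: float,
                         alpha: float = 0.05, power: float = 0.8) -> int:
    """Approximate visitors needed in EACH variant to detect a relative lift
    with a two-sided two-proportion z-test (normal approximation)."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for significance
    z_beta = NormalDist().inv_cdf(power)           # critical value for power
    p_bar = (p1 + p2) / 2
    numerator = (z_alpha * sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    return ceil(numerator / (p2 - p1) ** 2)
```

Comparing `visitors_per_variant(0.03, 0.05)` with `visitors_per_variant(0.03, 0.5)` shows the point of this section: a 5% relative lift needs far more visitors per variant than a 50% lift, because the required sample grows roughly with one over the square of the difference between the two rates.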

Working on large improvements means staying away from AB testing things like button colours, font size and grammatical changes. That’s not to say that these micro-changes are not important, only that they’re unlikely to register when you are dealing with small amounts of traffic.

At its core, conversion rate optimisation is about improving products and services for the people who use them: understanding what people want so that you can give it to them. This can result in batching lots of important changes together in a single test.

Batching changes together backfires when you’re working with large amounts of traffic, because some changes might lead to an improvement in one area while others cause problems elsewhere. Not being able to isolate effects is a lot riskier when the cost of a mistake is high. Breaking a large redesign into a series of isolated, self-contained changes keeps the risk of each test contained. On the other hand, a prudent approach like this could take decades with small amounts of traffic.

When improving conversion for a small business with low traffic, the solution is to batch large groups of significant changes together. This means restructuring and clarifying your core value proposition and focusing on addressing fundamental problems for your users. Leap, don’t tip-toe. Rather than using AB testing to fine-tune your website, you use it to make sure that you are leaping in the right direction.

Fixing fundamentals means addressing who your customers are and what they want. The only way to do this is to speak directly to the people who use your product.

You can use pop-up polls, email surveys, one-on-one customer interviews, public reviews and/or live chat interactions (the data your customer support team is already collecting).

First-person user research, specifically the one-on-one interview, is hands down the best way to understand what to focus on. Speaking to people is a subtle process. I have linked to a book called The Mom Test in the footer; it is the single best resource on the subject. Once you start to understand what the problems are, you can begin to refactor the value your product or service provides.

If you are struggling to get started, I have a set of 20 questions that I go through when I audit a landing page (which I have also linked to in the footer). Best practices are not a substitute for first-person research; they are, at best, a starting point.

To illustrate how best practices can be used as a starting point, I will outline how I audit a website when working with a client.

I want to work on real projects as I go through this 12-week program, so I put out an offer to audit and optimise anyone’s landing page for $100 while I am a student. I got a request from a potential client earlier this week. They get about 100 hits a week, but they sell a high-ticket product, so they want to improve their conversion rate.

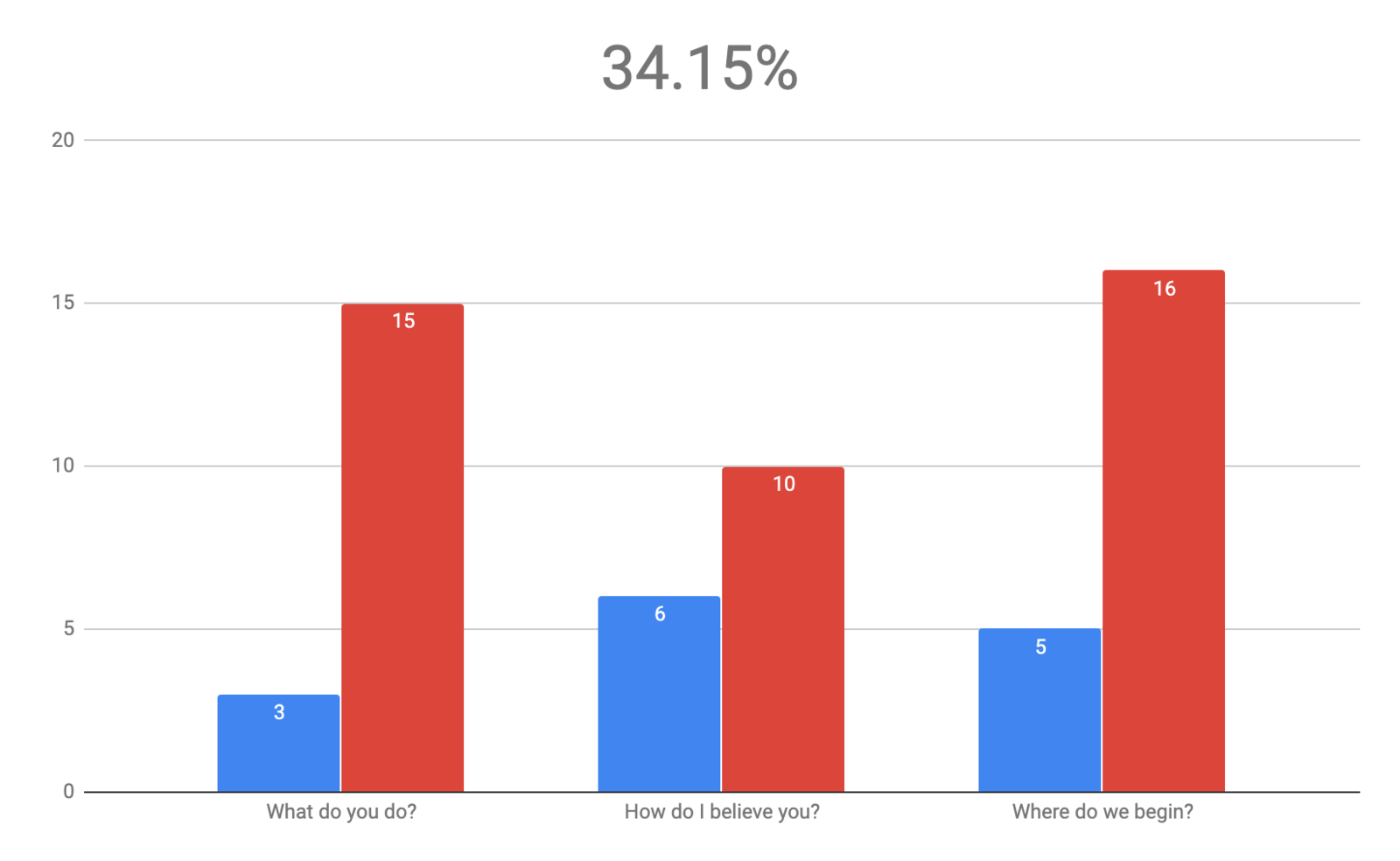

Rather than turning them away, I audited their website to see whether I could help. I currently have a 41-point checklist of best practices that is an expanded version of the 20 core questions I have linked in the footer. I scored the landing page and found that it covered only 34% of the best practices on my list.

My questions are grouped into three clear themes. The blue bars below show what they are currently doing; the red bars show what they could be doing. The larger the difference between them, the more potential improvements I can help with.
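Scoring an audit like this is simple arithmetic. Here is a minimal sketch, assuming each checklist item is a pass/fail check tagged with one of the three themes. The `ChecklistItem` type, the function names and any theme labels you pass in are hypothetical, since the themes themselves aren't listed here.

```python
from dataclasses import dataclass


@dataclass
class ChecklistItem:
    theme: str    # hypothetical theme label
    passed: bool  # does the page currently follow this best practice?


def coverage(items: list[ChecklistItem]) -> float:
    """Percentage of checklist items the page currently satisfies."""
    if not items:
        raise ValueError("checklist is empty")
    return 100 * sum(item.passed for item in items) / len(items)


def gaps_by_theme(items: list[ChecklistItem]) -> dict[str, tuple[int, int]]:
    """For each theme, return (items currently passed, items possible)."""
    result: dict[str, tuple[int, int]] = {}
    for item in items:
        passed, possible = result.get(item.theme, (0, 0))
        result[item.theme] = (passed + int(item.passed), possible + 1)
    return result
```

For example, a page passing 14 of 41 items would score about 34%. The per-theme `(passed, possible)` pairs correspond to the "currently doing" and "could be doing" bars, and the gap between them is where the improvements are.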

Once I’ve established that I can help, the next step is to ask for access to the site’s analytics (if they have them). This lets me understand where the traffic is coming from, what the current conversion rate is, where bottlenecks exist, and whether there are any technical issues with the site (for example, problems specific to a certain browser).
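Spotting a bottleneck in analytics data usually means comparing how many visitors reach each step of the funnel. The sketch below assumes you have already exported visitor counts per step; the function names, step names and numbers are made up for illustration.

```python
def step_conversion(funnel: list[tuple[str, int]]) -> list[tuple[str, float]]:
    """Share of visitors who move from each funnel step to the next one."""
    rates = []
    for (name, count), (next_name, next_count) in zip(funnel, funnel[1:]):
        rate = next_count / count if count else 0.0
        rates.append((f"{name} -> {next_name}", rate))
    return rates


def biggest_bottleneck(funnel: list[tuple[str, int]]) -> tuple[str, float]:
    """The transition where the smallest share of visitors continues."""
    return min(step_conversion(funnel), key=lambda pair: pair[1])
```

With a hypothetical funnel of `[("landing", 100), ("pricing", 40), ("checkout", 10), ("purchase", 5)]`, `biggest_bottleneck` returns `("pricing -> checkout", 0.25)`, pointing at the step where the largest share of visitors drops out.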

Analytics can show you what is happening, but it tells you nothing about cause and effect. Customer research reveals why those relationships exist.

To understand the ‘why’ I asked if we could install a small click poll on the bottom corner of the website. I know only a few people will complete this, but even a few will give us a lot of useful information. What I want to understand is customer intention. Are people just browsing? Are they first time buyers? Do they have a clear understanding of what they want? I need this information to better position the messaging to meet people where they are.

Next, I asked for access to customer support emails from the last 3 months. The idea is to find themes and recurring problems that come up in support conversations.
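Finding recurring themes in support emails is mostly careful reading, but once you have a first set of themes, a crude keyword tally can help check which problems come up most often. This is a minimal sketch: the theme names and keyword lists are hypothetical placeholders, and plain substring matching will produce false hits (for example, "cost" also matches "costume").

```python
from collections import Counter

# Hypothetical themes and keywords. In practice these would come from reading
# the emails first, not from a fixed list chosen up front.
THEMES = {
    "pricing": ["price", "cost", "expensive", "discount"],
    "shipping": ["delivery", "shipping", "arrive"],
    "setup": ["install", "setup", "set up", "instructions"],
}


def tag_email(text: str, themes: dict[str, list[str]] = THEMES) -> set[str]:
    """Return every theme whose keywords appear (as substrings) in the email."""
    lowered = text.lower()
    return {theme for theme, keywords in themes.items()
            if any(keyword in lowered for keyword in keywords)}


def count_themes(emails: list[str]) -> Counter:
    """Count how many emails mention each theme (each email counts once per theme)."""
    counts: Counter = Counter()
    for email in emails:
        counts.update(tag_email(email))
    return counts
```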

Lastly, I asked if I could reach out to the 10 most recent customers with a request for one-on-one interviews. The goal here is to understand what might have stopped them from buying when they initially considered the product. I want to know what their experience of the product is so far, what they like about it and how their life has changed as a result of it. I am looking for insights but I am also looking for how they articulate these insights. I want to capture exact turns of phrase to use in the messaging. Additionally, if a customer has not left a review then I can formulate one based on quotes from our interview. I then present the review back to the customer and ask if it is ok to share it as a testimonial on the website.

I am aware that sharing customer support transcripts and speaking to recent customers is highly invasive, and I am just a student. To address this, I am going to present all of my questions for approval first. I have also suggested we do a mock interview so that my client understands what their customers will experience before letting me reach out to them.

In addition, or in case I do not get permission, I will be doing a competitor analysis and a heuristic analysis of the website. A heuristic analysis is a subjective assessment in which I, along with 3-5 other UX professionals, rate the site on things like clarity, security and friction.
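Because a heuristic analysis is subjective, it helps to look at both the average rating and how much the reviewers disagree. Here is a minimal sketch, assuming each reviewer rates each criterion on a 1 to 5 scale; the scale, the function name, and the reviewer and criterion names are assumptions.

```python
from statistics import mean, pstdev


def summarise_ratings(ratings: dict[str, dict[str, int]]) -> dict[str, tuple[float, float]]:
    """ratings[reviewer][criterion] -> {criterion: (mean rating, spread across reviewers)}."""
    criteria = sorted({criterion for scores in ratings.values() for criterion in scores})
    summary = {}
    for criterion in criteria:
        # Only include reviewers who actually rated this criterion.
        scores = [s[criterion] for s in ratings.values() if criterion in s]
        summary[criterion] = (mean(scores), pstdev(scores))
    return summary
```

A criterion with a low mean is a candidate problem; a high spread suggests the reviewers see it differently, which is worth discussing before acting on it.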

The more accurate the diagnosis, the more effective the treatment can be. Based on the insights we uncover, I will bundle all the changes into a single treatment that we can test. This test will be purely confirmatory. I am only checking to make sure that the changes don’t underperform the original.
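One way to frame a "don't underperform" check statistically is a one-sided non-inferiority test: instead of asking whether the new version wins, it asks whether we can rule out it being worse by more than some acceptable margin. The sketch below illustrates that idea and does not describe any specific testing tool; the function name, the unpooled standard error and the margin you choose are all assumptions.

```python
from math import sqrt
from statistics import NormalDist


def non_inferior(conv_control: int, n_control: int, conv_variant: int, n_variant: int,
                 margin: float, alpha: float = 0.05) -> bool:
    """One-sided non-inferiority z-test using an unpooled standard error.

    Null hypothesis: variant rate <= control rate - margin (absolute margin).
    Returns True if the data reject that null, i.e. the variant is unlikely
    to be worse than the control by more than `margin`.
    """
    p_c = conv_control / n_control
    p_v = conv_variant / n_variant
    se = sqrt(p_c * (1 - p_c) / n_control + p_v * (1 - p_v) / n_variant)
    if se == 0:
        raise ValueError("no variation in the data; the test is undefined")
    z = (p_v - p_c + margin) / se
    return z > NormalDist().inv_cdf(1 - alpha)
```

With 50 of 1,000 control visitors and 60 of 1,000 variant visitors converting, `non_inferior(50, 1000, 60, 1000, margin=0.01)` returns `True`; if only 30 variant visitors had converted, it would return `False`.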

I will do my best to post the results in a case study once complete, but what I am allowed to share is at my client’s discretion at this stage.

As a final note, I should also point out that we will be working with lower confidence levels because we have small amounts of traffic. When a test result is significant at the 95% level, it means that if there were really no difference between the two versions, a difference at least this large would show up by chance less than 5% of the time. It is important to understand that 95% is an arbitrary convention. We will be lowering this to 80%, which means we need less traffic. The trade-off is that we accept a higher risk that a result is a coincidence: when there is no real difference, up to 1 in 5 tests could still come out significant, rather than 1 in 20.
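To see what lowering the threshold buys in practice, the sketch below estimates how many weeks a two-variant test would need at a given weekly traffic level, using a standard normal-approximation sample-size formula for two conversion rates. The 2% baseline conversion rate, the 50% relative lift, the 80% power and the function name are hypothetical; only the roughly 100 visits a week comes from the client described earlier.

```python
from math import ceil, sqrt
from statistics import NormalDist


def weeks_needed(baseline_rate: float, relative_lift: float, weekly_visitors: int,
                 confidence: float, power: float = 0.8) -> int:
    """Approximate weeks for a two-sided AB test with traffic split evenly."""
    alpha = 1 - confidence
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    p_bar = (p1 + p2) / 2
    n_per_arm = ((z_alpha * sqrt(2 * p_bar * (1 - p_bar))
                  + z_beta * sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2) / (p2 - p1) ** 2
    # Both variants need n_per_arm visitors, drawn from the same weekly traffic.
    return ceil(2 * ceil(n_per_arm) / weekly_visitors)
```

Comparing `weeks_needed(0.02, 0.5, 100, 0.95)` with `weeks_needed(0.02, 0.5, 100, 0.80)` shows the 80% setting finishing noticeably sooner, which is the reason for accepting the extra risk.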

Small businesses redesign their websites without any objective measurement all the time. The only thing people track is the eventual impact on the bottom line. More often than not, small business redesigns get approved on the basis of team intuition and founder buy-in. Rather than flipping a coin and hoping for the best, it makes sense to take whatever validity you can get with the traffic and timeframe you have.

If you run a small business that doesn’t have lots of traffic, you can (and should) still focus on improving your conversion rate. AB tests are just one aspect of conversion rate optimisation. The other aspect is customer research: understanding who your customers are, what they want and how they want it. Use customer research to continuously refactor the value your product provides. You can still use AB testing as a confirmatory process to make sure each significant change you make is taking you in the right direction, regardless of how much traffic you get.

## Links mentioned

- If you’d like to read more posts about conversion optimisation, you can follow me on Twitter @joshpitzalis.

- The 20 questions I use to audit a new landing page.

- This is post 2 in a series. The rest of the posts are listed here.

- The Mom Test by Rob Fitzpatrick is the single most useful and informative book I have ever read on doing customer interviews.

- This is the CXL Institute’s conversion rate optimisation program I am currently doing.