I got accepted to the CXL Institute’s conversion optimisation mini-degree scholarship. It claims to be one of the most thorough conversion rate optimisation training programs in the world. The program runs online and covers 74 hours and 37 minutes of content over 12 weeks. As part of the scholarship, I have to write an essay about what I learn each week. This is my tenth report.

I decided to learn more about conversion rate optimisation because I appreciate objectivity in my work. Designing and building digital products can be a highly subjective process at times. Everyone has an opinion on what needs to be done, no one knows what will work, and it can all get overwhelming at times.

Seven weeks ago I received a scholarship to the CXL Institute’s conversion optimisation mini-degree. While I am a long way from mastering the subject, I do have a much clearer sense of what the subject is about, when it’s appropriate and how it works. This post outlines everything I have learned about improving your website conversion rate and why that’s important.

WHAT IS A CONVERSION RATE?#

A conversion rate is just a measurement of how many people in a group do something. If 1000 people visit your website and 20 of them sign up to your mailing list, then your mailing list conversion rate is 2% (20 / 1000 × 100).
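To make the arithmetic concrete, here is a minimal sketch of that calculation. The function name `conversion_rate` is my own; it is not taken from any analytics tool.

```python
def conversion_rate(conversions: int, visitors: int) -> float:
    """Return the conversion rate as a percentage of visitors."""
    if visitors <= 0:
        raise ValueError("visitors must be a positive number")
    # Multiply before dividing to keep the arithmetic exact for whole numbers.
    return conversions * 100 / visitors


# The mailing list example from above: 20 signups out of 1000 visitors.
mailing_list_rate = conversion_rate(20, 1000)
print(f"{mailing_list_rate}%")
```
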

Optimising conversion means making improvements to your website so that more people sign up next month. These improvements usually mean changes to the messaging, design or functionality of your website and wider sales funnel. That said, conversion rate optimisation (CRO) is not marketing. It covers the sales funnel after traffic arrives on your site. Finding and driving quality traffic to your website is a marketing problem; conversion is what happens when that traffic arrives. There will certainly be areas of overlap (like technical SEO, or making sure ad design and copy are congruent with the landing page messaging), but CRO is not marketing, it’s sales.

WHY BOTHER WITH CONVERSION?#

A low conversion rate means you have a leaky bucket. You can pour as much time and money into advertising and marketing as you want, but if none of your visitors sign up or buy your product then it’s all a waste of time.

If you’re investing your time and attention into growing your business, or if you plan to spend a lot of money on paid advertising, then CRO will make sure you waste as little of it as possible.

WHO CARES ABOUT THIS STUFF?#

1000 transactions a month. If you have 1000 transactions a month that’s when you need to start caring about conversion rate optimisation.

A transaction doesn’t have to be a commercial transaction. It can be anything you want to optimise. Want to improve your email signups? You should have about a thousand signups a month before you start to invest serious time and attention into improving your conversion rate.

1000 is just a rule of thumb; I will explain how to work out exactly how many transactions you need later in the post. The point is that if you have fewer than 1000 transactions a month then it will take too long to run statistically reliable tests. No one wants to wait 35 weeks to find out if a certain change to their website has any impact. When you have fewer than 1000 transactions per month your time and attention are better invested in growth.

Conversion rate optimisation takes time and dedication. It is expensive work: you have to have skilled people working on it full time, and the tooling is not cheap.

As a result, companies with a lot of traffic and lots of transactions per month care about conversion rate optimisation (CRO). They fall into three broad categories:

Ecommerce – Online stores, especially big ones, have a lot to gain from CRO because a tiny improvement can have an immediate and direct impact on their bottom line.

Software – Attentional software products (like social media platforms and entertainment services) and software as a service companies (like digital tools and productivity platforms) care about CRO because their entire sales funnel and product exist online.

Everyone else – This could be business services, educational institutions, finance and insurance, legal services, travel sites. It’s not so much the type of industry as the size of the company. Any business with a website that crosses 1000 transactions a month will benefit from dedicating attention to its conversion rate.

ALTERNATIVES TO OPTIMISATION#

Conversion rate optimisation is a margins game. If you make 1 million dollars a year in annual recurring revenue then a 10% improvement in your conversion rate over the year will result in an additional $100K. It will cost you at least $100K to pay for the salaries of the 2 or 3 people who will be working full time on this pursuit.

Now let’s say you are making 10 million dollars a year, the exact same work will result in an additional million dollars. That will pay for the same salaries 10 times over.

If you don’t have the margins, that $100K will be better spent on people who will help your company grow, rather than optimise.
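The two scenarios above can be sketched as a quick back-of-the-envelope calculation. This assumes, as the examples do, that revenue scales in direct proportion to the conversion rate. The function name and the fixed $100K team cost are illustrative only.

```python
def optimisation_payoff(annual_revenue: int, uplift_percent: int, team_cost: int):
    """Return (extra revenue, what is left after paying the team)."""
    # Assumption: a 10% better conversion rate means 10% more revenue.
    extra_revenue = annual_revenue * uplift_percent // 100
    return extra_revenue, extra_revenue - team_cost


for revenue in (1_000_000, 10_000_000):
    extra, net = optimisation_payoff(revenue, uplift_percent=10, team_cost=100_000)
    print(f"${revenue:,} revenue: ${extra:,} extra, ${net:,} after salaries")
```

At 1 million dollars the work only breaks even. At 10 million the same team leaves $900K on the table after salaries.
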

The problem here is that running statistically reliable experiments requires a lot of data. However, experimentation and A/B testing is just one part of conversion rate optimisation. It also encompasses:

- customer research,

- good design,

- copywriting,

- experimentation,

- engineering,

- and a keen understanding of analytics.

I’d argue that covering the fundamentals above is just good product development. You are probably doing this stuff already. If you are not, you should be.

If you cannot run experiments, you can still make intelligent, data-driven decisions without the reassurance of testing.

SO WHAT KIND OF RESULTS ARE WE TALKING ABOUT?#

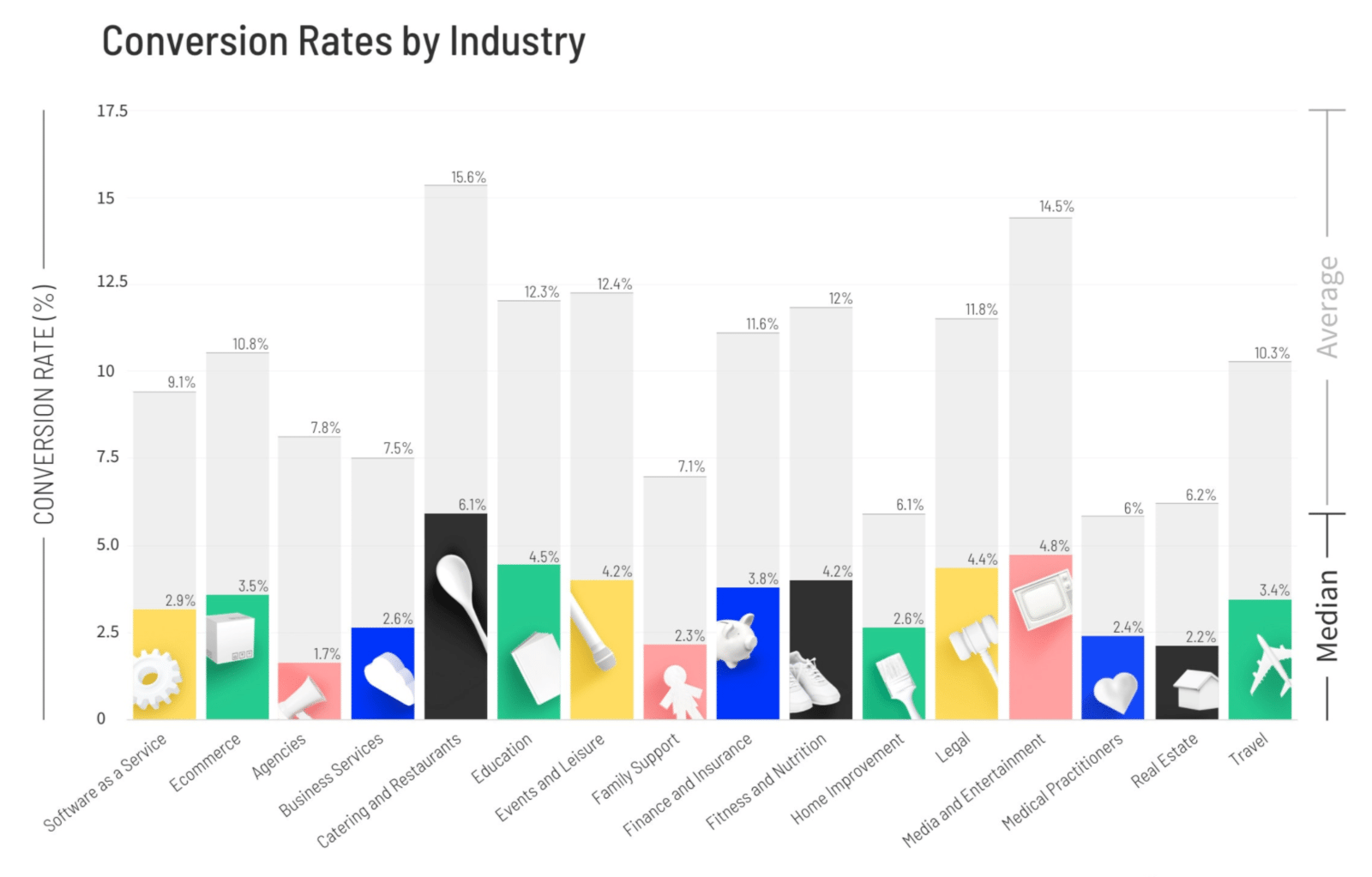

A company called Unbounce analysed 34 thousand landing pages across 16 major industries and found that the median conversion rate for all the landing pages was 3.2%.

This image is taken from the 2020 benchmark report. I will link to it in the footer below so you can explore it in more detail and go over their exact methodology and the insight they have for your specific industry.

It is important to point out that a conversion can mean many things: buying, downloading, subscribing, it could mean a new lead, a phone call, an in-person appointment. So the comparison in the image above is not fair. Still, it does provide a practical overview of what a cross section of industries looks like.

Perhaps the main takeaway from this information should be that if you are currently converting below your industry median, then reaching that median should be your first goal. Once you are on par with the industry median you can aim for your industry average. The top performers do much better, though: they had a median conversion rate of 25% (based on the top 25th percentile).

A FRAMEWORK FOR IMPROVING CONVERSION#

At its core, conversion rate optimisation is about improving products and services for the people who use them: Understanding what people want so that you can give it to them.

A/B testing is a valuable tool in this process because what people actually want is hard to predict.

A/B testing is exactly what it sounds like: you have two versions of something (A and B) and a metric that defines success. To determine which version converts better, you drive traffic to both versions and then measure which version was more successful.

This Twitter summary of the process sums it up, but it leaves out a lot of the more complicated details, like: how do we figure out what to test? Or at what point are we allowed to call one version more successful than another?

Another way to look at the optimisation process is to think of it as a build-measure-learn cycle:

First You Learn#

Once you’ve established a project’s business goals you start by auditing the product’s current conversion rate to establish a baseline.

Then you begin your research. This broadly falls into two categories:

- looking at what people do and

- listening to what they say.

Analytics tools help you look at group behaviours. User testing and screen tracking tools help you look at individual behaviour. Looking at what people do will tell you what people are doing but it won’t tell you why. To understand the why you must speak to people.

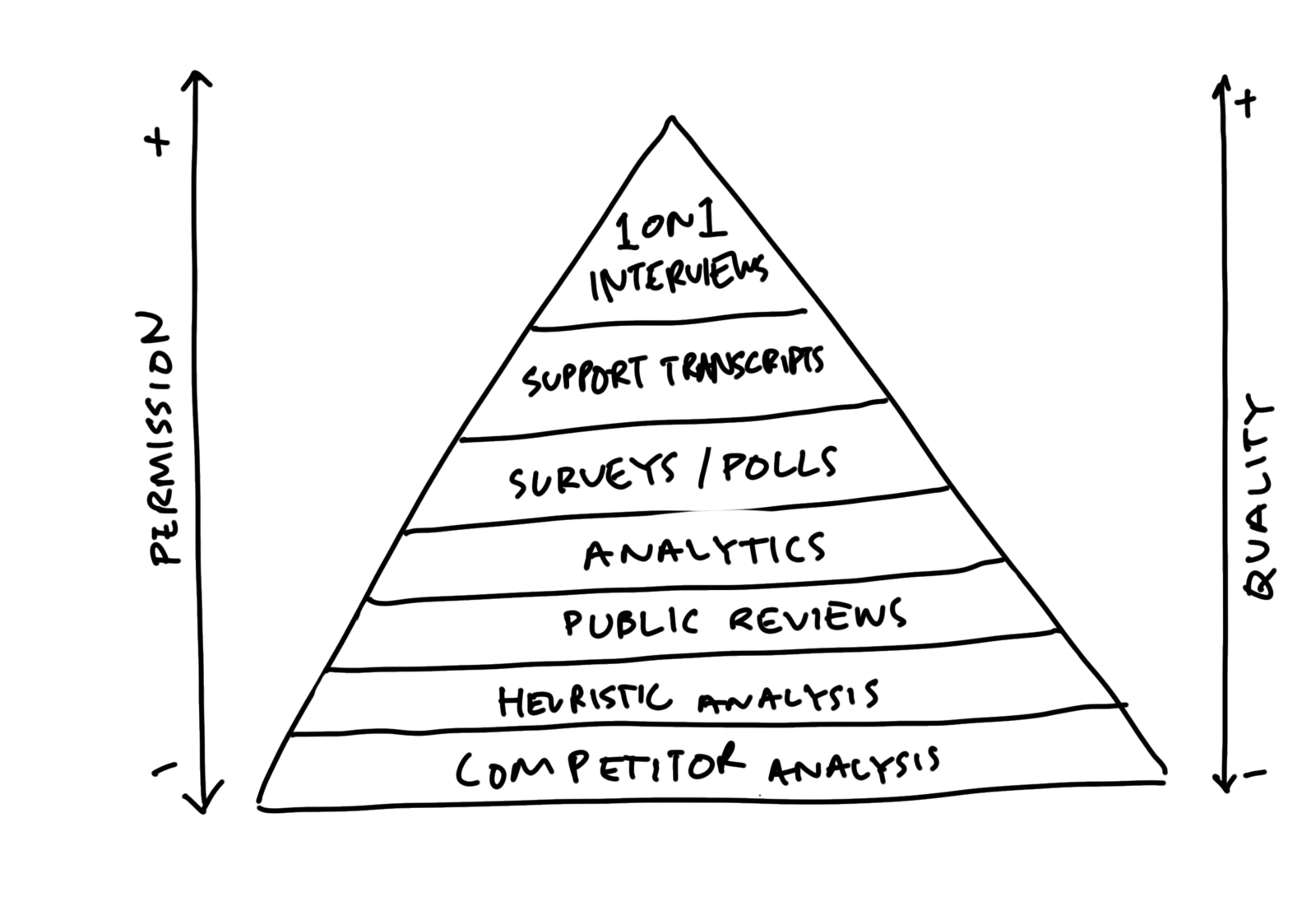

Speaking to people could mean direct customer interviews. It can mean surveys and polls, or your customer support transcripts. It can also just mean going through public reviews and testimonials. However you get there, understanding individual dynamics and motivations helps you understand the why.

The lower rungs of this pyramid don’t necessitate any involvement from other members of your product team, nor do they require any permissions from your customers. The higher up you go the richer the information gets, but the more permissions and coordination you need.

Based on your insights, you come up with a list of things you can focus on to improve conversion. These best guesses need to be translated into formal hypotheses so that you can rank and test them.

The idea is to be able to focus on the highest priority changes that will lead to the biggest impact in the shortest period of time with the least difficulty.

Then You Build#

Once you’ve honed in on the hypothesis you want to test you have to actually design the changes, write the copy and/or build the feature.

Finally You Measure#

Once the changes are ready to be implemented it’s time to set up the testing infrastructure and run the tests.

Once the tests are complete, circle back to step one, analyse the results and repeat the process.

One of the easiest mistakes to make when you start A/B testing product changes is throwing darts. “Let’s change the colour of these buttons”, “Let’s make the headlines bigger”, “What if we just add emojis everywhere”. That’s not to say that these changes won’t have an impact, it’s just that a more systematic approach to testing will let you narrow in on meaningful changes faster, and you won’t waste a tremendous amount of time and manpower flipping switches.

Improving conversion is largely a diagnostic process. You start with questions, you gather data, and once you have an understanding of what’s going on you try to make the best decisions you can with the evidence you have. Then you measure the result and repeat the process.

DIFFERENT TYPES OF TESTING#

“A/B testing” has become a general term for all types of testing, but it’s really just one of a few approaches. It’s good to know what the different types of testing are, so that you know to pick the right one for the context.

A/B Testing#

A/B testing involves comparing two versions of something. If one version is better than the other, the results show you the magnitude of the difference.

There are a few ways to run A/B tests.

Most A/B testing tools have editors that make a copy of the original page that you can then tweak. You can edit wording, change colours, you can even add, remove or rearrange elements if you want.

Another way to do A/B testing is called split testing. This works best when you want to test two distinct designs. Instead of making lots of changes in the testing editor you can create a whole new page and then split the traffic between the original and the new page.

One more way to run AB tests is to use feature flags. This is a good approach when you want to A/B test functionality. Coding entire features into an AB testing editor is not practical. With a feature flag you could test two different versions of a certain feature and then measure their impact on an outcome.

Multivariate Testing#

Multivariate testing is similar to A/B testing except that you can test lots of different changes at the same time. The setup is combinatorial, so if you want to test two different types of headlines and two different kinds of button designs at the same time it will create 4 combinations to test.
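Here is a small sketch of how those variations multiply, using Python's `itertools.product`. The headline and button names are placeholders I made up for illustration.

```python
from itertools import product

# Hypothetical options for two page elements.
headlines = ["headline A", "headline B"]
buttons = ["button A", "button B"]

# Every headline is paired with every button design.
variations = list(product(headlines, buttons))
for headline, button in variations:
    print(headline, "+", button)

# The count grows quickly: three elements with three options each.
bigger_test = list(product(range(3), repeat=3))
print(len(variations), len(bigger_test))
```

Two elements with two options each give 4 variations, but three elements with three options each already give 27, and each one needs its own share of traffic.
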

The problem with multivariate testing is that it requires an incredible amount of traffic. If you are struggling to do a basic A/B test then multivariate testing is out of the question.

Multivariate testing can be useful once you have already found a winner with a basic A/B test. You can follow a winning test with a multivariate test that explores all the minor changes that were not important enough to test in isolation.

The Bandit Test#

Bandit testing is similar to A/B testing except that it splits the traffic dynamically. When a variation starts to perform better, the test will drive more traffic to that variation. A bandit test is good when the result is more important than the information.

Let’s say you are selling an ebook and you can either give away a free chapter (and capture people’s email addresses to drive a pre-sale campaign) or you can push for pre-orders on the landing page. You could test all three permutations with a bandit test. If capturing email and pushing for pre-orders together turns out to be the most effective strategy then the test will push more and more traffic to that variation.
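To show what dynamic traffic splitting can look like, here is a minimal sketch of one simple bandit strategy called epsilon-greedy. This is only an illustration: real testing tools may use different algorithms, and the conversion rates below are numbers I invented for the three ebook variations.

```python
import random


def run_bandit(true_rates, visitors, epsilon=0.1, seed=0):
    """Simulate an epsilon-greedy bandit and return (shown, converted) per variation."""
    rng = random.Random(seed)
    shown = [0] * len(true_rates)
    converted = [0] * len(true_rates)
    for _ in range(visitors):
        if rng.random() < epsilon:
            # Explore: occasionally send a visitor to a random variation.
            arm = rng.randrange(len(true_rates))
        else:
            # Exploit: send the visitor to the best performer so far.
            observed = [c / s if s else 0.0 for c, s in zip(converted, shown)]
            arm = observed.index(max(observed))
        shown[arm] += 1
        if rng.random() < true_rates[arm]:
            converted[arm] += 1
    return shown, converted


# Hypothetical rates: free chapter only, pre-order only, both together.
names = ["free chapter", "pre-order", "both"]
shown, converted = run_bandit([0.02, 0.05, 0.20], visitors=20_000)
for name, s, c in zip(names, shown, converted):
    print(f"{name}: shown to {s} visitors, {c} conversions")
```

Most of the traffic ends up on the strongest variation, which is the point: you get the result, but the weaker variations receive too little traffic to tell you much about them.
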

Existence Testing#

Existence testing is like multivariate testing except that you are removing elements rather than testing variations. If removing an entire element has no impact on the conversion rate then it probably doesn’t need to be there. Existence testing is used to build up an understanding of what the salient features in a system are and what their relative impact is.

So far I have covered what conversion rate optimisation is, why it is important and who it is important for. I have also shared a framework for thinking about the conversion process and the different types of tests there are. Now let’s look at how to calculate your conversion rate, how long you need to run tests for, and ideas you can test to get started.

CALCULATING YOUR CURRENT CONVERSION RATE#

Before you can track any kind of conversion you have to figure out what it is you want people to do. News sites want people to read articles, Facebook wants you to spend as much time as humanly possible on their site, a lot of sites just want you to sign up to their mailing list. For most SaaS businesses the goal of the landing page is to get people to sign up or start a free trial.

Google Analytics can help you measure how well you convert traffic coming to your website. If you want to measure how well people convert within your application, or through a mailing list campaign, there are better tools. I’m going to cover Google Analytics because it is by far the most ubiquitous way to measure traffic conversion.

You are allowed to track 20 goals in Google Analytics. To keep things simple I recommend just starting with your single most important goal.

To set up a goal in Analytics you must go to the admin section, click on goals in the view column, and then click on the red ‘create goal’ button. If you want to track people filling out a registration form then you will need to set up an event goal so that you can track the registration form submission event. You will need to go into the form in your landing page source code and trigger the event on submit. Depending on how you have Analytics set up, you will need to do this with Google Tag Manager or the Google Analytics event trigger.

When you fire the event you will need to define an event category and an event action. The category is the broad grouping of the event, so ‘sign-up’ or ‘registration’ or something like that. The action is the specific behaviour you are tracking, for example ‘form submission’. Labels and values are optional. If you have multiple signup forms you can use a label to add info about which form fired the event. If the signup is paid you can also allocate a dollar value to the action.

You must set up a goal in order to track your conversion rate. If event goals sound complicated, a simpler alternative is to set up a destination goal. This tracks when people land on a specific page. To do this you must create a page that people can only reach after completing your goal. You simply add the URL of the destination page to the goal and you are done. This is often why people direct you to a thank you page when you download something online.

You could also have a welcome page that people only visit once after they sign up. However, you must make sure people don’t see the welcome page every time they log in, otherwise it will skew your metrics. This is why, for signups, event goals make more sense. There are two other types of goals but those are more suited to blogs and content-focused websites so I won’t go into those.

Once you have set up your goal, the source/medium page from before will have a whole new section appended to the end of it called Conversion. This page now has three groups of columns: acquisition, behaviour and conversion.

The acquisition column tells you how much traffic is coming from each of your sources. This is what almost everyone uses Analytics for.

The behaviour column tells you how engaged a source of traffic is. There are three sub-columns: the bounce rate (the percentage of people that view a page once, do nothing else and then leave), pages per session and the average session duration. These three columns help me assess the quality of my traffic. The final column is Conversion, which shows you the result of all this traffic and engagement.

There are lots of other things you can do with the conversion section. You can set up multiple goals, you can have multi-channel goals, you can even set up neat visualisations to see where people drop out of your sales funnel but I don’t recommend diving into all that until you need to.

IMPLEMENTING YOUR TEST#

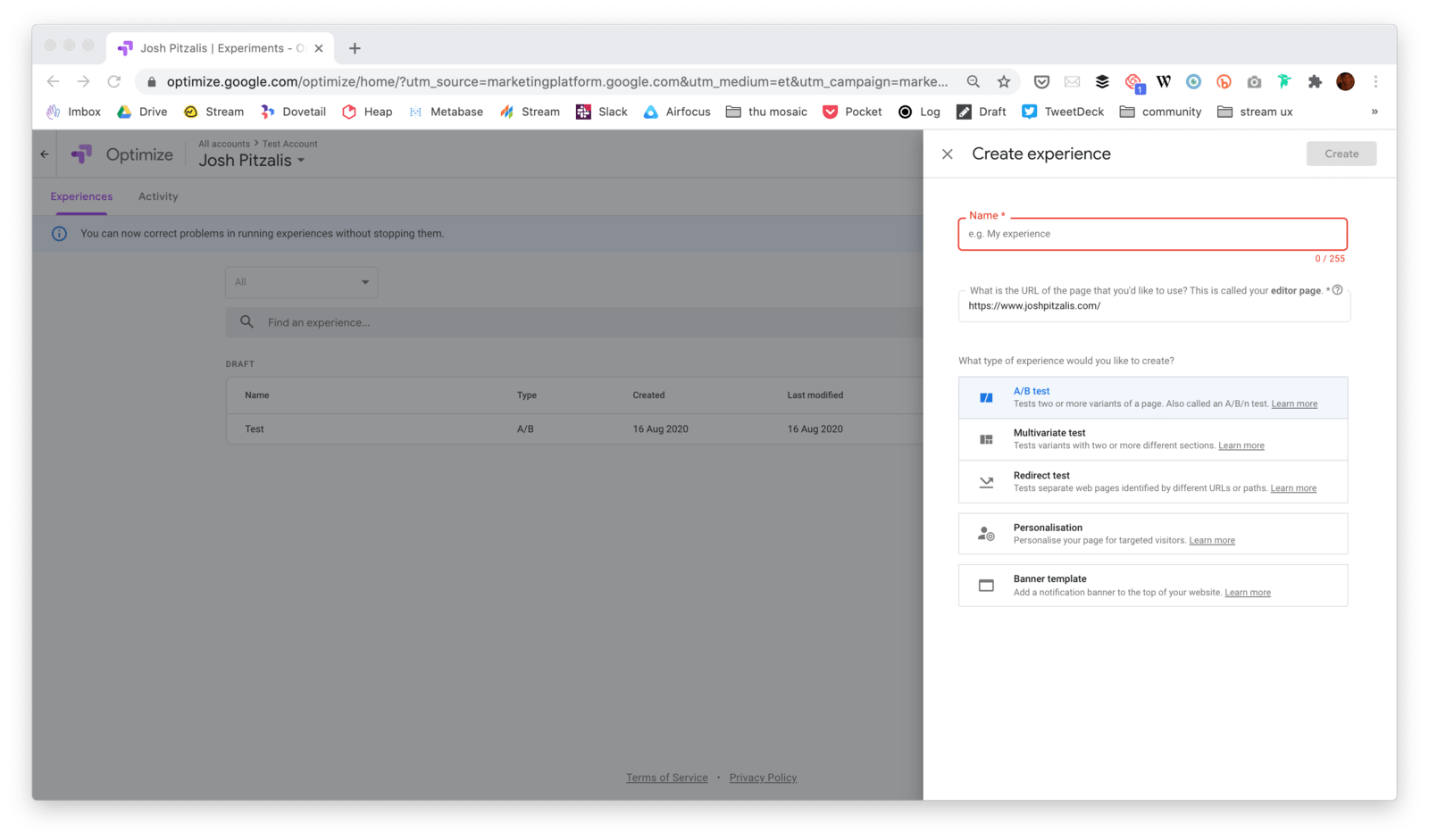

If you are measuring traffic to your website with Google Analytics then you can use another product called Google Optimize to run A/B tests. You need to create an account to get started. Once you are set up, you can create your first optimisation experience.

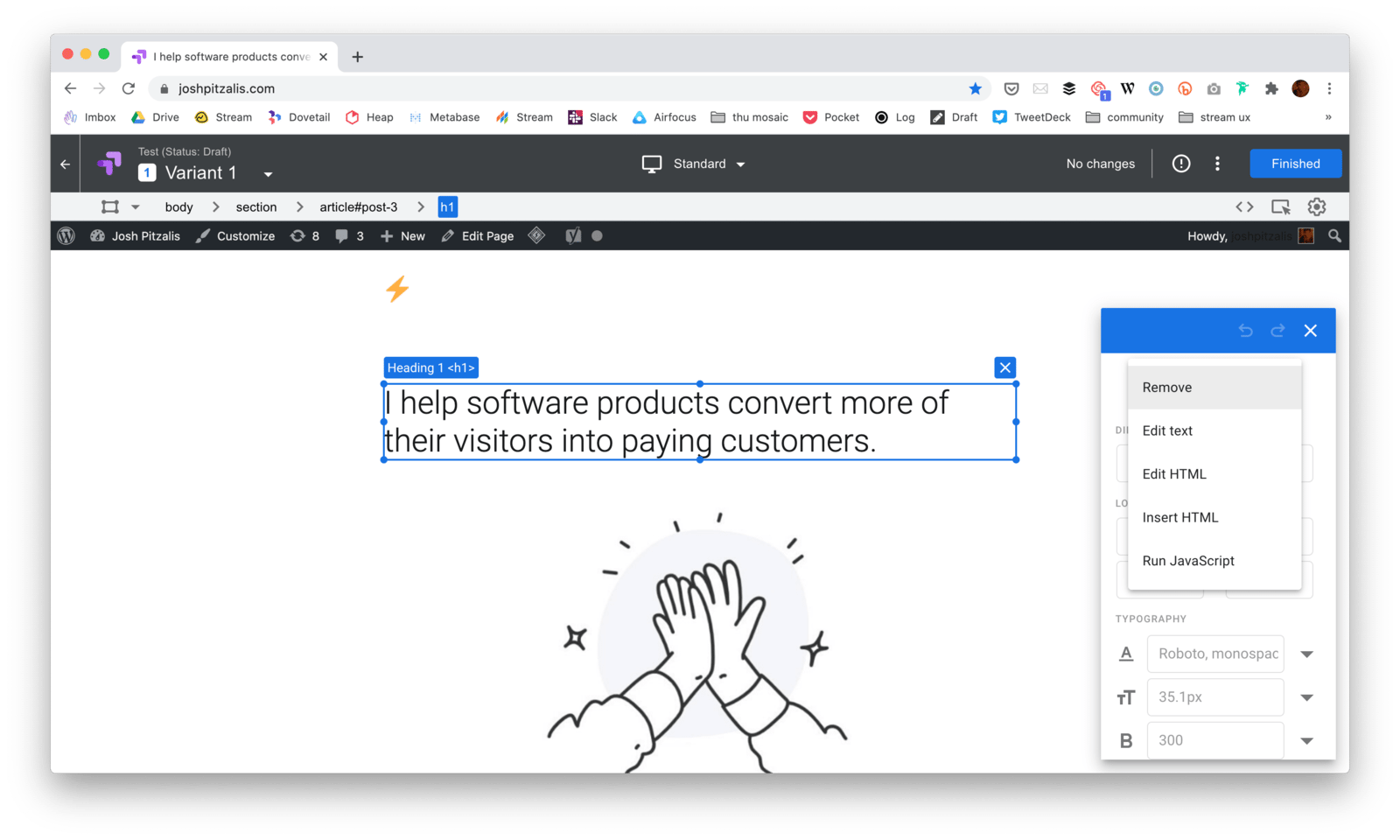

You give the test a name, add the URL to the website you want to test and pick the type of test you want to do. Your options are an AB test, a multivariate test, or a split test (I have added a link to part 1 of this article in the footer that explains the difference between these).

Setting up a test involves adding at least one variant. A variant is just a version of your website with some changes applied to it. If you want to test a version of your website with green text and another with red text then you would set up one green text variant and another red text variant.

If you decide to do an A/B test (as opposed to a split test) then adding a variant lets you edit the original site in an editor. You can click on headers or paragraphs and change what they say without touching any website code. You can change CSS, add or remove HTML elements, or even add snippets of JavaScript. The editor is great when you want to make small tweaks to a site. If you want to make large changes then it is probably easier to set up a new website with all the changes and run a split test instead. That way half of your users will see the original site and the other half will see the new site you have worked on.

Once you are finished you will need to link Google Analytics to the Optimize account so that it can keep track of where traffic goes.

Finally, you will need to define the goal of your experiment. By default you will be able to measure time on a page, the bounce rate and pages visited. You also have the option to define custom events. This is where you can add an event on your site for form submissions, or a download event, or whatever else you want to track. Once you have defined the goal, you are ready to start the experiment.

Optimize will give you the option to check that your site is properly configured before going live. Configuration means adding an Optimize JavaScript tag to your site (in addition to the Analytics tag you already have installed) and an anti-flicker snippet. Google provides links to both during the setup process. Once installed you can run the setup test to make sure your site is working correctly before launching the experiment.

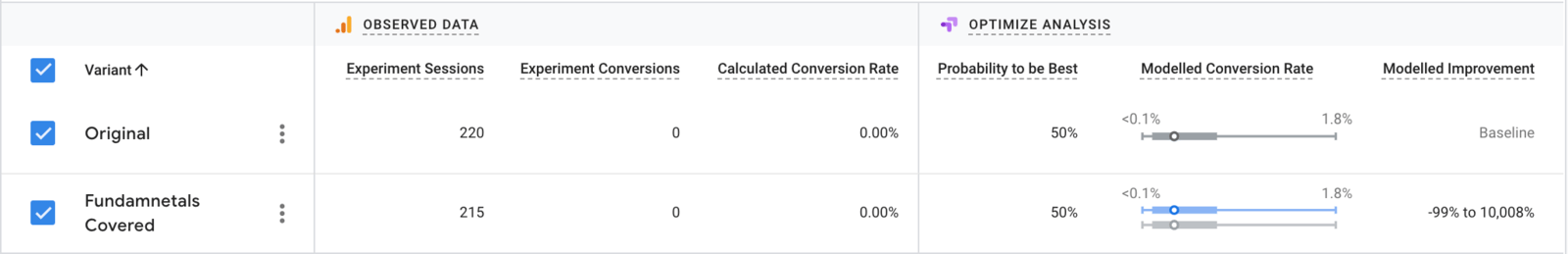

When a test is live you will get a result table like the one above. This shows you how many people are going to each version of the site and how many have converted in each version. I have 0 conversions in the example above so the probability that one version is better than the other is between -99% and 10,008%. As conversions start to come in, this probability starts to narrow until it becomes practical.

SO HOW LONG DO YOU NEED TO RUN A TEST FOR?#

Imagine you have two dice and you want to check if one is weighted. You roll both dice 10 times. One die seems to be random, the other lands on 3 for more than half of the rolls.

If you check for significance, you’ll get what looks like a statistically significant result. If you stop now, you will think one die is heavily weighted. This is how ending a test early can lead to a significant, but inaccurate, result.

The reality is that 10 rolls is just not enough data for a meaningful conclusion. The chance of false positives is too high with such a small sample size. If you keep rolling, you will see that the dice are both balanced and there is no statistically significant difference between them.

The best way to avoid this problem is to decide on your sample size in advance and wait until the experiment is over before you start believing the results Google Optimize gives you.

Let’s say you have a website that gets about 1000 hits of traffic a month. About 3% of the people who visit your website sign up to your mailing list. An industry-leading competitor published a case study where adding emojis to their sign up button improved signups.

You want to try emojis out on your website. So you hire someone to set up two versions of your website, the original one, and then a new one with emojis. You A/B test the difference by driving half of your traffic to one side and half to the other. Then you wait to see how many people sign up to the mailing list in each version. After a week, 3 people have signed up through the original and 5 signed up through the emoji version. Clearly, the new variation is an improvement, there is no need to continue testing, now you start driving 100% of the traffic to the new site.

Wrong.

Before you start your test you must calculate how long the test needs to run for before you can call it a success. You plug your data into an A/B test duration calculator and it tells you that you need to run the test for 8 weeks.

The actual number for your test depends on your current conversion rate, the size of the change you are trying to detect and how much traffic you get. If you have these variables then this two-minute video will show you how to calculate exactly how much traffic you need for your site:

I will add another link in the footer showing you how to use the simplest A/B test duration calculator I have managed to find.
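For a rough sense of what a duration calculator does, here is a minimal sketch based on the standard sample size formula for comparing two proportions. This is an assumption on my part: the calculators I link to may use different formulas or defaults. The 5% significance level, 80% power and all the example inputs are illustrative choices, and the function names are my own.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_variant(baseline, relative_lift, alpha=0.05, power=0.8):
    """Visitors needed in each variant to detect the given relative lift."""
    p1 = baseline
    p2 = baseline * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance
    z_beta = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


def weeks_to_run(baseline, relative_lift, weekly_visitors, variants=2):
    """Whole weeks needed to collect enough traffic across all variants."""
    total = sample_size_per_variant(baseline, relative_lift) * variants
    return ceil(total / weekly_visitors)


# Illustrative inputs: 3% baseline, hoping to detect a 20% relative
# improvement (3% to 3.6%), with 5000 visitors a week.
print(sample_size_per_variant(0.03, 0.20), "visitors per variant")
print(weeks_to_run(0.03, 0.20, weekly_visitors=5000), "weeks")
```

The thing to notice is how sensitive the result is to the size of the change: halving the lift you want to detect roughly quadruples the traffic you need.
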

If you are not convinced that you need to calculate your tests in advance then I recommend running an AA test before you start experimenting.

To set up an AA test you run a regular test but with the exact same website in both conditions. AA tests are painful because they force you to acknowledge just how random and meaningless AB test results can be.
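If you don't have the traffic to spare for a real AA test, you can get a feel for the problem with a simulation. The sketch below is a toy model under my own assumptions: both versions convert at the same made-up 3% rate, and significance is checked with a pooled two-proportion z-test at the 5% level. It compares someone who peeks every 100 visitors and stops at the first "significant" result with someone who only looks once at the end.

```python
import random
from statistics import NormalDist


def p_value(conv_a, conv_b, n):
    """Two-sided p-value of a pooled two-proportion z-test, n visitors per version."""
    pooled = (conv_a + conv_b) / (2 * n)
    se = (2 * pooled * (1 - pooled) / n) ** 0.5
    if se == 0:
        return 1.0  # no conversions yet (or all converted): nothing to compare
    z = abs(conv_a - conv_b) / n / se
    return 2 * (1 - NormalDist().cdf(z))


def simulate_aa(rate=0.03, visitors=1000, peek_every=100, trials=300,
                alpha=0.05, seed=1):
    """Return (share of tests 'won' by peeking, share 'won' at the planned end)."""
    rng = random.Random(seed)
    won_by_peeking = won_at_end = 0
    for _ in range(trials):
        conv_a = conv_b = 0
        saw_significance = False
        for n in range(1, visitors + 1):
            # Both versions are identical, so they share the same true rate.
            if rng.random() < rate:
                conv_a += 1
            if rng.random() < rate:
                conv_b += 1
            if n % peek_every == 0 and p_value(conv_a, conv_b, n) < alpha:
                saw_significance = True
        if saw_significance:
            won_by_peeking += 1
        if p_value(conv_a, conv_b, visitors) < alpha:
            won_at_end += 1
    return won_by_peeking / trials, won_at_end / trials


peeking_rate, final_rate = simulate_aa()
print(f"false winners when peeking: {peeking_rate:.0%}")
print(f"false winners at the planned end: {final_rate:.0%}")
```

Every "winner" here is a false positive, because the two versions are the same. Peeking can never produce fewer of them than waiting, since the final check is just one of the peeks.
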

Always calculate your test duration before you start a test.

WHAT DO I TEST THEN?#

What you test is contextual to your project. There is no standard suite of things to test; you need to think about what your product does, who it’s for, and where there is room for improvement in the path users take to experience the value you offer them.

That said, people of the internet have been building landing pages for a while now and have established patterns that work. If you are not following these patterns it might be worth running tests to see if they can help you improve your conversion rate. Here are 20 questions I ask myself when auditing a landing page.

What does your product do?

- Could a child understand what your product does, based on your headline?

- Does your headline clarify who your product is for?

- Does your byline explain how your product does what it claims to do in ten words or less?

- Does your landing page contain a screenshot, demo or sample of what is being offered?

Why should I care?

- Does your landing page explicitly explain what your product can do for your customer? So benefits in the first paragraph, not features.

- Does the copy clearly explain what advantages your product has over other existing solutions?

- Do you offer anything for free on your landing page?

How do I believe you?

- Does your landing page show proof of benefits (such as case studies) that you promise?

- Do you prominently display icons/images to assure the safety and security of data?

- Does your landing page contain testimonials (or logos) from existing customers?

- Is your landing page on HTTPS?

- Does your landing page offer a guarantee or refund?

- Does your landing page address the most common objections for people?

Where do we begin?

- Does your landing page contain a single offer for visitors to choose from? If you have multiple offers, consider building multiple landing pages so each one is relevant and targeted.

- Are your call-to-action, headline and core benefit located close to each other? Try guiding the visitor by placing important persuasive elements next to each other on the landing page.

- Is your call-to-action above the page fold? To avoid the case of visitors not scrolling down, try moving your call-to-action above the page fold.

- Is your call-to-action obvious from twenty steps away from the screen (size, colour, contrast)?

- Does your call-to-action start with a verb and describe what will happen next (Start trial, See pricing, Join waiting list)? Persuasive text on a call-to-action makes it easier for the visitor to think of a reason to click on it.

- Does your landing page have fewer than three outbound links? Ideally, a landing page should have no external links so that a visitor does not get distracted.

- Is your landing page a single-page experience? Single-step landing pages work much better than multi-step landing pages.

The goal here is not to be able to answer YES to all of these questions. The goal is to use these best practices as a starting point for your A/B tests. I must emphasise that collecting data on how your customers use your product is the best way to inform what you should test. These questions are only a fallback if you don’t have enough data or if you can’t find any obvious bottlenecks.

Improving conversion is largely a diagnostic process. Diagnostic information typically comes from three places:

- Analytics

- User Testing

- Interviews

Analytics can include all the data you get from Google Analytics or a similar platform. How long people are spending on your site, goal completion rates, where they are dropping off, that kind of stuff. Then there is the more functional aspect to analytical data, like page speed problems, bugs associated with different browsers, server downtime, etc. Broadly speaking, analytical data is about observing what people do in aggregate.

Then there’s user testing. User testing is about observing what individual people do. This can be done in-person or remotely. You give people specific tasks to complete and then watch how well they do and where they get stuck.

Finally, there is qualitative research. This encompasses things like pop-up polls, email surveys, customer interviews, public reviews and live chat interactions (the data your customer support team is already collecting). The best kind of qualitative research comes from speaking to your customers and listening to what they say. Customer interviews won’t solve all your problems but they will help you understand what better means to the people who use your product.

To summarise, I had a look at what conversion rate optimisation means, I went over what kind of results you can expect from an optimisation program, a framework for thinking about conversion research and the different types of tests there are. Then I covered how to calculate your conversion rate and how long you need to run tests for and went over some ideas to get started.

I hope you found this useful and can use the information to start running experiments to improve your conversion. If you get stuck or have questions, the best place to reach me is on Twitter @joshpitzalis.

Links#

- Here is the Unbounce 2020 Benchmark report I mentioned https://unbounce.com/conversion-benchmark-report/

- Vlad Malik’s magnificent reverse sample size calculator.

- Google Optimize https://marketingplatform.google.com/about/optimize/

- A helpful YouTube series to get started with Optimize

- An introductory article on how to do customer interviews

- An introductory article on how to do user testing

- This is post 11 in a series. The rest of the posts are listed here.

- This is the CXL Institute’s conversion rate optimisation program